Geolograph

The geological phonograph is a musical instrument that uses topographical data of the Earth to generate a sound wave. Sounds are synthesized by placing a circle or other closed shape at any GPS location and sampling the elevations of hundreds or thousands of points along the path. The ups and downs of mountains and valleys become ups and downs in the waveform, which creates a unique timbre compared to other synthesis techniques.
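As a rough illustration of the sampling step, here is a minimal sketch, not the instrument's actual code. The function name `sample_circle`, the `elevation_at` lookup, and the choice to measure the radius in degrees are all assumptions made for the example; it also ignores the fact that a degree of longitude shrinks toward the poles.

```rust
use std::f64::consts::TAU;

/// Sample `n` elevations along a circle centred on (`lat`, `lon`).
///
/// `elevation_at` is a hypothetical lookup from (latitude, longitude) to an
/// elevation value; any data source could sit behind it.
/// Simplification: the radius is in degrees and latitude/longitude are
/// treated as if they covered equal distances.
fn sample_circle<F>(lat: f64, lon: f64, radius_deg: f64, n: usize, elevation_at: F) -> Vec<f64>
where
    F: Fn(f64, f64) -> f64,
{
    (0..n)
        .map(|i| {
            // Walk once around the circle in n equal angular steps.
            let angle = TAU * i as f64 / n as f64;
            let sample_lat = lat + radius_deg * angle.sin();
            let sample_lon = lon + radius_deg * angle.cos();
            elevation_at(sample_lat, sample_lon)
        })
        .collect()
}
```

Calling `sample_circle(46.0, 7.0, 0.01, 512, lookup)` with some `lookup` function would return 512 raw elevations, one full trip around the circle, ready to be turned into one cycle of a waveform.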

Read more about the process in my blog post about the instrument → Geological Phonograph

Difficulties

A short reflection on some of the difficulties of building such an instrument.

Turning elevation data into sound

This was not exactly straightforward. The elevation data ranges from around zero to over 3000, and sound samples have to be constrained between -1 and 1. It’s also best for the sound samples to oscillate up and down through 0, so if I simply map 0 => 3000 to -1 => 1, much of the sound will be very quiet and will not make good use of the speakers. I solved this by continually calculating the average elevation in a certain area and mapping the elevations on either side of it into the range -1 => 1. This might not be the best solution, as it tends to make most places sound similar regardless of their elevation; there is definitely room for more exploration in how the elevation data is converted into sound waves.
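The difference between the two mappings can be sketched as follows. This is a simplified illustration under assumptions, not the exact code in the instrument: it uses the mean of a single buffer as the "average elevation", scales by the largest deviation from that mean, and the names `naive_map` and `elevations_to_samples` are made up for the example.

```rust
/// Naive mapping: 0 => max_elevation becomes -1 => 1.
/// A gentle hillside only covers a sliver of that range, so the result is
/// quiet and sits off-centre instead of swinging through 0.
fn naive_map(elevation: f64, max_elevation: f64) -> f64 {
    (elevation / max_elevation) * 2.0 - 1.0
}

/// Centred mapping: subtract the buffer's average elevation, then scale so
/// the largest deviation on either side reaches exactly -1 or 1.
fn elevations_to_samples(elevations: &[f64]) -> Vec<f32> {
    if elevations.is_empty() {
        return Vec::new();
    }
    let mean = elevations.iter().sum::<f64>() / elevations.len() as f64;
    // Largest distance from the mean, used as the scaling factor.
    let peak = elevations
        .iter()
        .map(|e| (e - mean).abs())
        .fold(0.0_f64, f64::max);
    if peak == 0.0 {
        // Perfectly flat terrain: output silence rather than dividing by zero.
        return vec![0.0; elevations.len()];
    }
    elevations
        .iter()
        .map(|e| ((e - mean) / peak) as f32)
        .collect()
}
```

For the elevations `[1000.0, 1100.0, 1000.0, 900.0]`, the centred mapping gives `[0.0, 1.0, 0.0, -1.0]`, while the naive mapping with a maximum of 3000 keeps every sample between roughly -0.4 and -0.27. Because the centred version always stretches to full scale, a low plain and a high plateau with the same shape produce the same samples, which is the "most places sound similar" trade-off.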

Speed

I originally wrote the code in Python, using srtm.py to query elevation data at different places in the world, but I soon ran into issues with the amount of time it took to generate sound. Even after experimenting with multithreading and other optimizations, the fastest version of the program took ~1.2 seconds to generate one second of audio. This resulted in constant clicking as playback stopped momentarily to wait for the next buffer.

To solve this, I eventually decided to rewrite the code in Rust. I had never used Rust before, but I had recently learned about a new creative coding framework called Nannou which I was interested in trying out. This had the added benefit of solving two problems at once, as Python is not exactly stellar for creating visuals for an interface and I wanted to stay away from having to use pygame to create a display.

Rust is a much lower-level language than I am used to, and the documentation for Nannou is rather lacking (in fact, the documentation for the latest version was non-existent as it failed to build), but I managed to learn enough from the example projects to figure out most things fairly easily. Maybe one of the biggest “programming culture shocks” from switching to Rust was when I tried to use a modulus operator on two floating point numbers, which seemingly had no effect.
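One possible source of this kind of surprise, offered as an assumption rather than a diagnosis of what happened in my code: on floats, Rust's `%` is a remainder that keeps the sign of the left-hand value, so a negative number stays negative instead of wrapping around into the positive range. The standard library's `rem_euclid` wraps instead. The helper names below are hypothetical, and "phase" is just an example of a value you might want to keep between 0 and 1.

```rust
/// Wrap with `%`: the result keeps the sign of `phase`,
/// so negative inputs do not wrap into [0, 1).
fn wrap_with_rem(phase: f64) -> f64 {
    phase % 1.0
}

/// Wrap with `rem_euclid`: the result is never negative,
/// so negative inputs wrap around into [0, 1).
fn wrap_with_rem_euclid(phase: f64) -> f64 {
    phase.rem_euclid(1.0)
}
```

With these, `wrap_with_rem(-0.25)` returns `-0.25`, which can look like the operator did nothing, while `wrap_with_rem_euclid(-0.25)` returns `0.75`. For positive inputs such as `1.25`, both return `0.25`.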

Interface Design

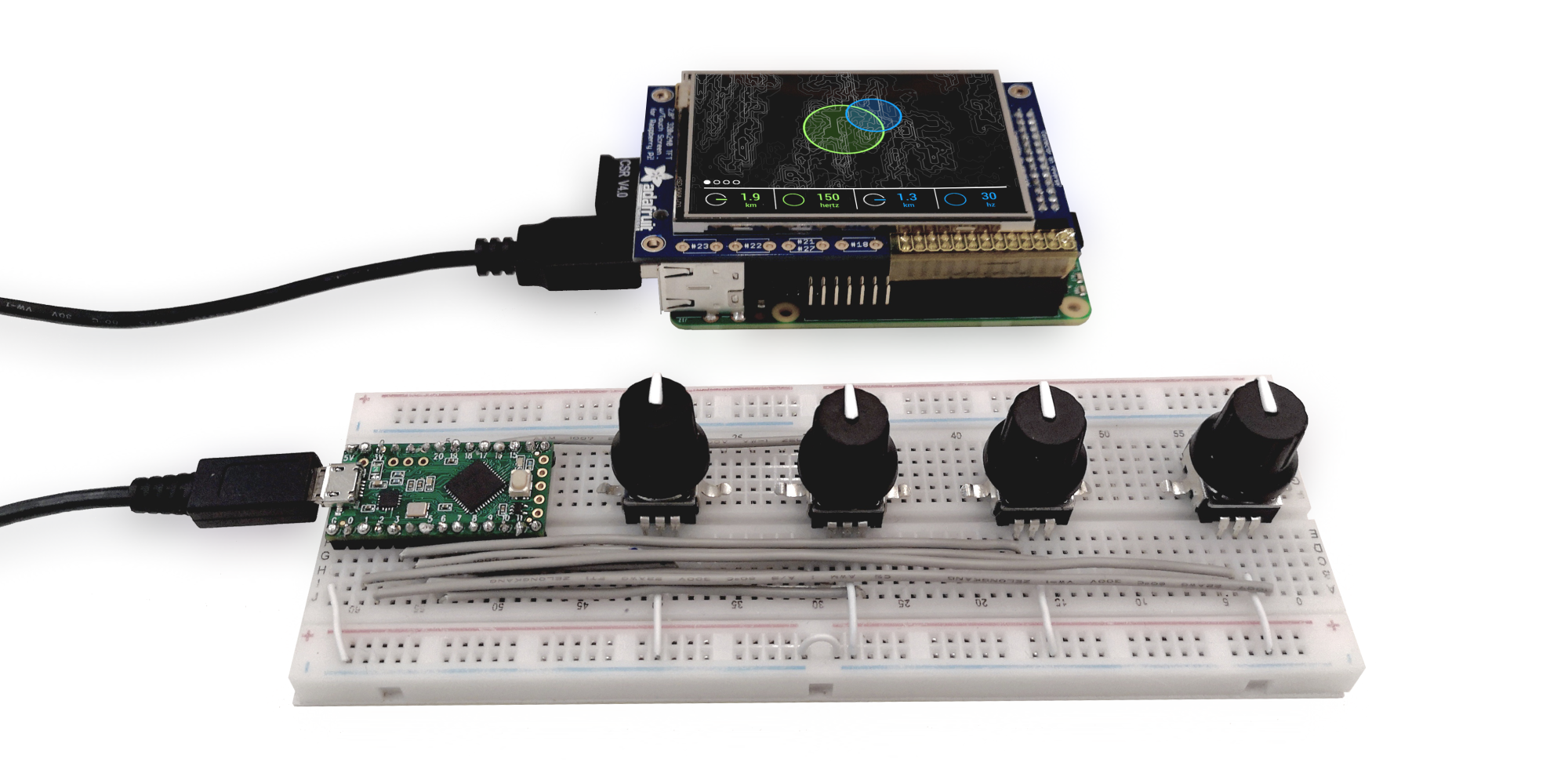

Designing a good interface can be a long project in itself, especially for the types of projects that I tend to work on. As such, I didn’t end up spending too much time on this aspect of the project. The interface was inspired by Teenage Engineering’s OP-1, and the time that I did spend on it mostly went into how the numbers and icons would correspond to dials and circles on screen. I spent very little time considering what to actually display on the screen.

I chose a rather literal representation: topographical contours with circles layered on top, spinning around each other as one circle modulates the other. This representation initially made sense to me because it reflects how I collect the data in the first place: moving circles around each other and sampling points around a circle to create sound waves. However, I now realize that it doesn’t feel like the sound. Many of the OP-1 screens, for example, don’t have very literal interface designs; they show a cow, a spaceship, and so on. But these screens make sense because, instead of mapping to how the sound is generated, they map the interface to how we feel and experience the sound.

This completely flips my conception of interface design, particularly for synthesizers and musical instruments, although I imagine the idea will be useful for other types of interface design as well. What does the sound feel like, and how can the interface reflect the feel of the sound? And how might I still keep the topographical aspect of the interface, so that the user can choose any GPS location in a way that makes sense? I have some ideas for moving forward, but I will definitely have to think more about them as I continue designing the interface for this instrument.

Future

This is all just scratching the surface of the possibilities of this instrument. I mentioned already that I want to use Moon or Mars elevation data to explore the sound topographies of the solar system, but there are so many more possibilities to explore.

- Add a joystick to move the central GPS coordinate

- Experiment with different ways to convert elevation data into sound

- Bring in elevation data of the Moon or Mars. How would different planets sound compared to Earth?

- What other datasets could be used? Wind? Geology? Roads or building footprints?

- Experiment with making the interface feel more like the sound that it creates

- What if this synthesis system were incorporated into a physical instrument? For example, an electric guitar that sounds different depending on where in the world you play it, so that you physically have to move to a new location to change the sound that it makes.

- Build or laser-cut a nice enclosure for the synthesizer

- Connect to a piano keyboard, or experiment with designing a physical interface that lets you more easily “play” the instrument

- Make a musical composition using only sounds from the Geolograph